Software


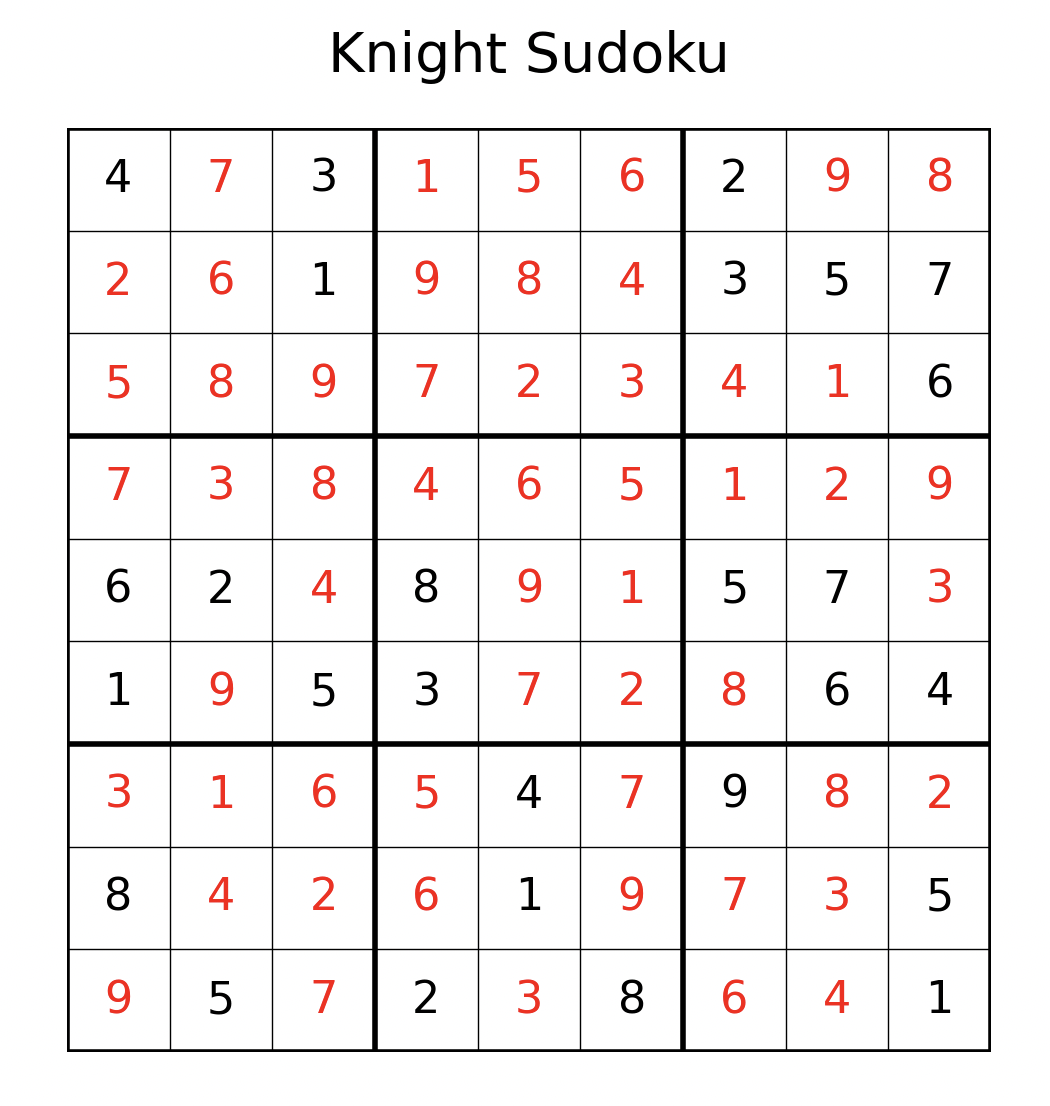

I really love puzzles, and whenever I have some time I like to think about how to generate and solve puzzles programmatically. My Python package The GitHub repo is here.


I am one of the contributors to


The R package


I had the privilege of working on versions 4.0 and 4.1 of The R package


Feature-weighted elastic net ("fwelnet") is a variant of the elastic net with feature-specific penalties. These penalties are based on additional information that the user has about the features (e.g., grouping information or expert views on the importance of each feature). This allows the model-fitting algorithm to leverage such external information to learn better predictive models. The R package
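As a rough illustration of what "feature-specific penalties" can mean, here is a generic weighted elastic net objective. This is a sketch under assumptions: it uses squared-error loss, and the per-feature weights $w_j \ge 0$ are illustrative notation rather than something taken from the package documentation. How the weights are derived from the side information is not shown here.

```latex
\min_{\beta_0,\,\beta}\;
\frac{1}{2}\sum_{i=1}^{n}\bigl(y_i - \beta_0 - x_i^{\top}\beta\bigr)^2
\;+\;
\lambda \sum_{j=1}^{p} w_j \left[\alpha\,\lvert\beta_j\rvert + \frac{1-\alpha}{2}\,\beta_j^{2}\right]
```

Setting $w_j = 1$ for every feature recovers the ordinary elastic net. A smaller $w_j$ means feature $j$ is penalized less, so features that the external information marks as important are shrunk less aggressively.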


Reluctant generalized additive models ("RGAM") are an extension of sparse generalized linear models (GLMs) which allow a model's prediction to vary non-linearly with an input variable. RGAM is guided by the principle that, all else being equal, one should prefer a linear feature over a non-linear feature. It is a multi-stage algorithm which scales well computationally, and it works for quantitative, binary, count and survival data. The R package


Principal components lasso ("pcLasso") is a new method for supervised learning which I worked on with Rob Tibshirani and Jerry Friedman. The method shrinks predictions toward the leading principal components of the feature matrix. It is especially useful when the features come in groups, and it works for both overlapping and non-overlapping groups. The R package

Other


I often find myself googling the same keywords over and over again, piecing together information across various sites to (re-)learn the derivation of or intuition behind statistical results. This blog is a way for me to pen down what I've learned as a form of knowledge retention, as well as to post short statistical tidbits that randomly pop into my head.


This blog is just like Statistical Odds & Ends, except that the content is mathematical but non-statistical.


I post math olympiad problems and brainteasers along with their solutions here. The goal is to bring students beyond reading complete proofs to understanding how the author thought of the solutions (and how they could have thought of them themselves). The blog is largely inactive, but I still post there once in a while.


I love to watch sports, especially international tournaments like the Olympics and the FIFA World Cup. For the 2018 FIFA World Cup, I compiled some basic statistics for the matches and countries, and did some simple data analyses. The data and analysis files are available on GitHub.
